Spark of Brilliance: Smart Automation with LLMs and Generative AI

- March 1, 2024

- Posted by: Kavitha V Amara

- Category: Quality Engineering

What is your vision for the Quality Assurance (QA) field a decade from now?

Well, for the first time ever, the World Quality Report (WQR) 2018–2023 identified end-user experience as the primary goal of QA and software testing strategy. Quality assurance is essential to developing software applications, yet running quality tests on those applications is a tedious task. Software test engineers used to write test code line by line, so the arrival of test automation brought them a welcome sense of ease.

By 2019 or 2020, QA had adopted numerous new tools and add-ons to keep pace with rapidly evolving technology, and the industry also needed broader Artificial Intelligence (AI) skills to complement these advancements. Calling the pace of technological advancement tremendous would be an understatement. This pressure compelled the QA industry to incorporate innovative technologies into its processes, aiming to maximize customer satisfaction in line with the findings of the WQR.

Traditional test automation will not be able to meet the demands of AI, intelligent DevOps, IoT, and immersive technologies as more and more “smarter” and “intelligent” products flood the market, so test engineers must adapt their test approaches. Immersive technologies such as virtual reality (VR) will become more commonplace and increasingly incorporated into products and ecosystems built on AI and IoT. Beyond new tools, the QA domain requires new methods and techniques. Codeless/no-code platforms, distributed ledgers, serverless architecture, edge computing, and container-based apps are just a few of the innovations that may affect QA testing procedures.

QA will therefore move up the Agile value chain along with AI in the coming years, which calls for a transformation in mindset and culture. Properly combining people, resources, methods, cultures, and habits will be essential, and quality assurance will need to keep innovating.

In this article, I explore some of the more pressing questions surrounding QA’s future and generative AI testing.

Examples:

- How will the frameworks for test automation look?

- How will testing tools evolve to meet the QA requirements of AI, with a focus on generative AI (Gen AI) and LLMs?

- Software testing before generative AI

- Software testing with generative AI

- Leveraging Generative AI for Specialized Testing

- Limitations of Generative AI Testing

Artificial Intelligence: The Emergence of Products

AI has disrupted industries and businesses ever since it arrived, and it continues to do so. Autonomous vehicles are the “next big thing” in the automotive business, and ML-powered diagnostic equipment is becoming increasingly common in healthcare. The market is seeing a surge in intelligent products that go beyond their basic purposes, ranging from AI-powered software for global security to “intelligent” decision-making systems. As deep learning, neural networks, and artificial intelligence become more prevalent, QA will face additional difficulties in adequately evaluating these products.

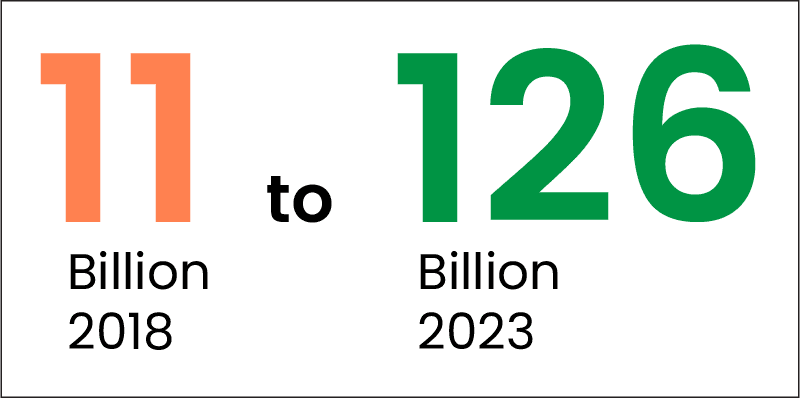

Global Market Prediction for AI Software Revenue

This market will continue to expand rapidly. According to a Tractica analysis, demand for AI software will grow significantly by 2025: over five years, annual global revenue is projected to rise from $11 billion in 2018 to $126 billion by 2023. In the next ten years, products with cognitive capabilities driven by AI and ML will flood the market.
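As a quick sanity check on these figures, the implied compound annual growth rate (CAGR) is (end / start)^(1 / years) - 1. The short Python sketch below applies that formula to the revenue figures quoted above, assuming the five-year span from 2018 to 2023 as stated; it says nothing about how the forecast itself was produced.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values over `years` years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above (USD billions): $11B in 2018 to $126B in 2023.
rate = cagr(11, 126, 5)
print(f"Implied CAGR: {rate:.1%}")  # roughly 62.9% per year
```

In other words, the forecast implies revenue growing by well over half each year, every year, for five years.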

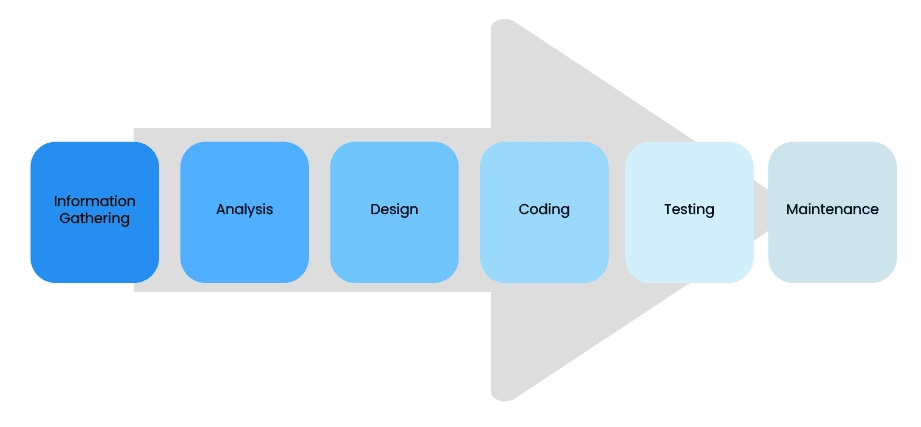

Before turning to the main topic, let us briefly discuss the software development life cycle (SDLC).

Spanning everything from requirements gathering to testing, the SDLC is crucial for organizations. Testing has become a largely automated process, and agile testing shortens each development cycle to two to three weeks. Continuous test automation combines speed and accuracy to produce the best results. With the widespread adoption of digital transformation, real-time testing driven by intelligent algorithms will be incorporated into continuous testing, significantly shortening the SDLC.

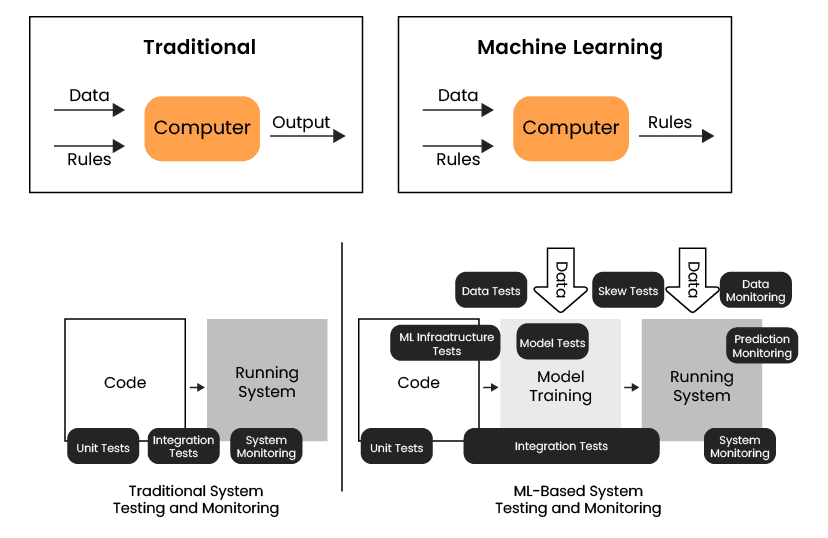

The rise of AI raises questions about its impact on QA, testing tools, and test automation. Traditional applications follow deterministic logic, producing a predictable output for a given input. In contrast, AI-based models operate on probabilistic logic, so the output for a specific input can be unpredictable. Because the output of an AI-based model depends on its training, testing AI is more complex: engineers may understand how to build and train an AI model, yet find it hard to comprehend the internal workings that lead to a given output. While the concept of AI is not new, Generative AI is the notable change, leading to an exciting revolution in how AI is applied.
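This difference changes how test assertions are written. The Python sketch below contrasts an exact-match check on a deterministic function with a statistical check on a stand-in for a probabilistic model. `noisy_classifier` is a hypothetical toy, not a real AI model, and the 0.9 accuracy threshold and sample size are arbitrary assumptions chosen for illustration.

```python
import random


def add(a: int, b: int) -> int:
    """Deterministic logic: the same input always yields the same output."""
    return a + b


def noisy_classifier(x: float, rng: random.Random) -> str:
    """Hypothetical stand-in for a probabilistic model: labels a positive
    input as "positive" about 95% of the time, otherwise "negative"."""
    if x > 0 and rng.random() < 0.95:
        return "positive"
    return "negative"


def test_deterministic() -> None:
    # An exact-match assertion is appropriate for deterministic code.
    assert add(2, 3) == 5


def test_probabilistic(samples: int = 1000, threshold: float = 0.9) -> float:
    # For probabilistic output, assert on an aggregate rate over many runs
    # instead of one exact value. A fixed seed keeps the test repeatable.
    rng = random.Random(42)
    hits = sum(noisy_classifier(1.0, rng) == "positive" for _ in range(samples))
    accuracy = hits / samples
    assert accuracy >= threshold, f"accuracy {accuracy:.2f} below {threshold}"
    return accuracy


test_deterministic()
print(f"Observed accuracy: {test_probabilistic():.3f}")
```

The seed makes the statistical test reproducible in CI; dropping it would test the model's variability as well, at the cost of occasional flaky runs.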

Why are Generative AI and LLMs required for Software Testing?

Software testing plays a key role in the development process. Yet, developers often face challenges conducting thorough testing due to time and resource constraints. In such scenarios, there arises a need for a system capable of intelligently identifying areas requiring detailed and focused attention, differentiating them from aspects amenable to automation based on repetitive patterns.

The latest advancements in generative artificial intelligence (AI) and large language models (LLMs) are raising the standards for software testing. Generative AI-based LLMs can deliver greater accuracy and quality in less time than traditional automation testing methods, as their recent effectiveness in helping produce high-quality software products suggests. Intelligent automation built on AI, Generative AI, and LLMs is a transformative technology that achieves top-notch performance in natural language processing tasks.

General computer automation using LLMs has also made considerable progress, with the aim of creating intelligent agents that automate computer tasks. One such approach uses a modular architecture with components for conversational intelligence, document handling, and application control, integrating OpenAI’s GPT-3 for natural language capabilities. The rapid advancements in LLMs and generative AI, together with the emergence of LLM-based AI agent frameworks, bring fresh challenges and opportunities for further research. A new breed of AI-based agents has entered the process automation space, making it possible to complete complex jobs.

Software Testing before Generative AI

Creating Test Cases: Test cases are comprehensive descriptions that outline quality parameters, such as quality requirements, test conditions, and quality thresholds, to assess the software product/application.
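A test case of this kind can be captured as structured data. The Python sketch below is one possible representation, assuming a single numeric measurement checked against a maximum threshold; the field names are illustrative, not a standard schema.

```python
from dataclasses import dataclass, field


@dataclass
class TestCase:
    """Illustrative test case: requirement, test conditions, and a quality threshold."""
    case_id: str
    requirement: str
    conditions: list[str] = field(default_factory=list)
    metric: str = "response_time_ms"
    max_allowed: float = 0.0

    def evaluate(self, measured: float) -> bool:
        """Return True when the measured value meets the quality threshold."""
        return measured <= self.max_allowed


login_case = TestCase(
    case_id="TC-001",
    requirement="Login page responds quickly",
    conditions=["valid credentials", "normal network"],
    max_allowed=500.0,
)
print(login_case.evaluate(320.0))  # True
print(login_case.evaluate(750.0))  # False
```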

Manual Execution: Test engineers execute quality tests as specified in the test case, verifying the results against the quality parameters documented in the test case.

Regression Testing: New code can conflict with existing code and introduce flaws that cause previously met quality requirements to fail. Regression testing addresses this by re-running tests created for earlier releases of a product against each subsequent release.
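A common way to automate this is to record outputs from a known-good release as a baseline and compare each new build against it. The Python sketch below assumes the outputs are JSON-serializable; `price_with_tax` is a hypothetical function under test and the baseline values are illustrative.

```python
import json


def price_with_tax(amount: float, rate: float = 0.2) -> float:
    """Hypothetical function under test."""
    return round(amount * (1 + rate), 2)


# Baseline captured from a previous, approved release (illustrative values).
BASELINE = json.loads('{"10.0": 12.0, "99.99": 119.99, "0.0": 0.0}')


def find_regressions(func, baseline: dict) -> list[str]:
    """Re-run every recorded input and report outputs that changed."""
    failures = []
    for raw_input, expected in baseline.items():
        actual = func(float(raw_input))
        if actual != expected:
            failures.append(f"input {raw_input}: expected {expected}, got {actual}")
    return failures


print(find_regressions(price_with_tax, BASELINE) or "No regressions")
```

Storing the baseline as data rather than hard-coding it in each test makes it easy to refresh deliberately when a behavior change is intended.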

Exploratory Testing: Also known as “ad-hoc” testing, this approach empowers test engineers to identify flaws without strictly adhering to predefined test cases. While test cases guide where to look for issues, they may not encompass all potential bugs. Exploratory testing allows testers to leverage their direct experience to identify bugs in the product/application.

Performance Testing: This type of testing evaluates how the product/application performs under heavy load, ensuring that it stays responsive, fast, and stable when subjected to a significant workload.
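A minimal load test fires many concurrent requests and checks latency percentiles against a target. The Python sketch below uses a thread pool and `time.perf_counter`; `handle_request` is a hypothetical stand-in that only sleeps, and the 100 ms p95 target is an assumed threshold, not a recommendation.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(_: int) -> float:
    """Hypothetical operation under test; returns its own latency in seconds."""
    start = time.perf_counter()
    time.sleep(0.01)  # simulate roughly 10 ms of work
    return time.perf_counter() - start


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, with pct between 0 and 100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def run_load_test(requests: int = 200, workers: int = 20) -> dict[str, float]:
    """Fire `requests` calls across `workers` threads and summarize latency."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        latencies = list(pool.map(handle_request, range(requests)))
    return {
        "p50_ms": percentile(latencies, 50) * 1000,
        "p95_ms": percentile(latencies, 95) * 1000,
        "max_ms": max(latencies) * 1000,
    }


results = run_load_test()
print({name: round(value, 1) for name, value in results.items()})
# Assumed service-level target: 95% of requests finish within 100 ms.
assert results["p95_ms"] < 100, "p95 latency exceeds the 100 ms target"
```

Because each call times itself, queueing delay inside the thread pool is not counted; dedicated load-testing tools usually measure from the moment a request is submitted.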

Security Testing: This test aims to identify potential hazards, flaws, and vulnerabilities. It assesses how well the program safeguards against resource and data loss, damage, and unauthorized access.
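One concrete security check is verifying that user input cannot change the meaning of a database query (SQL injection). The Python sketch below uses the standard-library `sqlite3` module with an in-memory database; the `users` table and `find_user` function are hypothetical, and a real security test suite would cover far more than one payload.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "admin"), ("bob", "viewer")],
    )
    return conn


def find_user(conn: sqlite3.Connection, name: str) -> list[tuple]:
    """Hypothetical lookup under test; uses a parameterized query."""
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


def test_sql_injection() -> None:
    conn = make_db()
    # Classic payload: if the input were concatenated into the SQL string,
    # the OR clause would match every row in the table.
    payload = "' OR '1'='1"
    assert find_user(conn, payload) == [], "injection payload returned rows"
    assert find_user(conn, "bob") == [("bob", "viewer")]


test_sql_injection()
print("SQL injection check passed")
```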

Software Testing with Generative AI

Test Data Generation: Test engineers require diverse test data in various formats, and Generative AI-powered LLMs can dynamically generate data in many of the required formats. For instance, LLMs hosted on Hugging Face that were trained on many programming languages can produce data for operational testing in those languages. OpenAI’s models can likewise generate JSON payloads for use in environments such as Visual Studio Code and Anaconda.
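Because generated data can be malformed or incomplete, it is worth validating it before use. The Python sketch below checks that a JSON payload (a hard-coded string standing in for LLM output; no model is called) parses and that each record has the expected fields and types. `REQUIRED_FIELDS` is a hypothetical schema.

```python
import json

# Hypothetical schema for a generated "customer" test record.
REQUIRED_FIELDS = {"id": int, "name": str, "email": str, "active": bool}


def validate_records(raw: str) -> tuple[list[dict], list[str]]:
    """Parse generated JSON; return (valid records, error messages)."""
    try:
        records = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [], [f"invalid JSON: {exc}"]
    if not isinstance(records, list):
        return [], ["expected a JSON array of records"]

    valid, errors = [], []
    for i, record in enumerate(records):
        if not isinstance(record, dict):
            errors.append(f"record {i}: not an object")
            continue
        problems = [
            f"{key} should be {expected.__name__}"
            for key, expected in REQUIRED_FIELDS.items()
            if not isinstance(record.get(key), expected)
        ]
        if isinstance(record.get("email"), str) and "@" not in record["email"]:
            problems.append("email lacks '@'")
        if problems:
            errors.append(f"record {i}: " + "; ".join(problems))
        else:
            valid.append(record)
    return valid, errors


# Stand-in for model output: one valid record and one with a wrong type.
generated = (
    '[{"id": 1, "name": "Ana", "email": "ana@example.com", "active": true},'
    ' {"id": "2", "name": "Raj", "email": "raj@example.com", "active": false}]'
)
good, bad = validate_records(generated)
print(f"{len(good)} valid record(s); problems: {bad}")
```

Python treats `bool` as a subclass of `int`, so a stricter validator would also reject `true` as an `id`; a schema library could replace this hand-written check.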

Test Case Generation: Test case generation involves creating diverse scenarios to verify that the software operates according to quality standards. LLMs such as Orca, from Microsoft, and Code Llama, from Meta, can help generate, design, analyze, and execute test cases and produce reports on identified defects. Generative AI techniques improve efficiency by automating the generation of test cases based on predefined criteria.
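In practice, generating test cases with an LLM usually means turning a requirement and its predefined criteria into a prompt, then parsing the model's reply into structured cases. The Python sketch below shows that flow; `fake_llm` is a hypothetical placeholder for whatever model client a team uses (no real Orca or Code Llama API is called), and the JSON reply format is an assumption that the prompt requests.

```python
import json
from typing import Callable


def build_prompt(requirement: str, criteria: list[str]) -> str:
    """Turn a requirement and predefined criteria into an instruction for the model."""
    bullet_list = "\n".join(f"- {c}" for c in criteria)
    return (
        "Write test cases for the requirement below.\n"
        f"Requirement: {requirement}\n"
        f"Cover these criteria:\n{bullet_list}\n"
        'Reply only with a JSON array of objects with keys "title", "steps", "expected".'
    )


def generate_test_cases(requirement: str, criteria: list[str],
                        complete: Callable[[str], str]) -> list[dict]:
    """Ask the model for test cases and keep only well-formed ones."""
    reply = complete(build_prompt(requirement, criteria))
    try:
        cases = json.loads(reply)
    except json.JSONDecodeError:
        return []  # treat unparseable output as "no usable cases"
    if not isinstance(cases, list):
        return []
    keys = {"title", "steps", "expected"}
    return [c for c in cases if isinstance(c, dict) and keys.issubset(c)]


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; returns a canned reply."""
    return json.dumps([
        {"title": "Reject empty password",
         "steps": ["Open login page", "Submit a blank password"],
         "expected": "Validation error is shown"},
        {"title": "Incomplete case"},  # missing keys, so it is filtered out
    ])


cases = generate_test_cases("Users log in with email and password",
                            ["empty fields", "wrong password"], fake_llm)
for case in cases:
    print(case["title"])
```

Filtering malformed cases rather than failing outright reflects the probabilistic nature of model output; a team might instead retry the request or log the rejects for review.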

Effective test case generation is crucial for ensuring software’s reliability, functionality, and quality, and it contributes to the successful delivery of error-free products. Beyond generating new test cases, it is equally important to optimize existing test suites, particularly in large-scale legacy systems, and Generative AI solutions can play a pivotal role in streamlining these established suites and making them more efficient.

Generative AI techniques can analyze and refine an existing test suite, identifying redundant or outdated test cases. Using machine learning algorithms, they can prioritize critical test scenarios, ensuring comprehensive coverage while reducing overall testing effort. This optimization is essential for keeping test suites relevant and effective over time.
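One way to make "redundant" concrete is coverage-based suite minimization: keep a subset of tests that still covers every requirement (or code element) the full suite covers. The Python sketch below uses the classic greedy set-cover heuristic, a conventional algorithm rather than a generative AI technique, on a hypothetical test-to-coverage map; an AI-assisted tool might supply or refine such a map.

```python
def minimize_suite(coverage: dict[str, set[str]]) -> list[str]:
    """Greedy set cover: repeatedly pick the test covering the most uncovered items."""
    remaining = set().union(*coverage.values())
    selected = []
    while remaining:
        best = max(coverage, key=lambda t: len(coverage[t] & remaining))
        gained = coverage[best] & remaining
        if not gained:
            break  # defensive; cannot happen when remaining comes from coverage
        selected.append(best)
        remaining -= gained
    return selected


# Hypothetical map of test name -> requirements (or code paths) it exercises.
coverage_map = {
    "test_login_ok": {"R1", "R2"},
    "test_login_bad_password": {"R2", "R3"},
    "test_login_full_flow": {"R1", "R2", "R3"},
    "test_logout": {"R4"},
}
kept = minimize_suite(coverage_map)
print("Keep:", kept)
print("Redundant:", sorted(set(coverage_map) - set(kept)))
```

Greedy set cover is not guaranteed to find the smallest suite, but it is fast and usually close; tests flagged as redundant are candidates for review, not automatic deletion, since coverage data rarely captures everything a test checks.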

Integrating Generative AI into the testing process therefore does more than generate new test cases: by optimizing large-scale legacy test suites, it can smooth transitions such as vendor replacements while preserving software reliability and quality.

Regression Analysis: The first step is to examine the inputs to testing, such as test plans and recent product changes, so that automated testing is well prepared and effective. Regression analysis then automates the related tasks, plans, scripts, and workflows, capturing and mapping users’ journeys across real-time applications. The result is a roadmap for the testing team.
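Examining product changes can be partly automated with change-based regression test selection: run only the tests whose dependencies overlap the files changed in a release. The Python sketch below assumes a hypothetical, precomputed map from tests to the source files they exercise (normally derived from coverage data or build metadata).

```python
def select_tests(changed_files: set[str], test_deps: dict[str, set[str]]) -> list[str]:
    """Return tests affected by the change set, in a stable order."""
    known = set().union(*test_deps.values())
    if changed_files - known:
        # A changed file that no test is known to touch: be conservative
        # and fall back to running the full suite.
        return sorted(test_deps)
    return sorted(test for test, deps in test_deps.items() if deps & changed_files)


# Hypothetical dependency map: test -> source files it exercises.
TEST_DEPS = {
    "test_checkout": {"cart.py", "payment.py"},
    "test_search": {"search.py"},
    "test_profile": {"user.py"},
    "test_refund": {"payment.py", "orders.py"},
}

print(select_tests({"payment.py"}, TEST_DEPS))  # ['test_checkout', 'test_refund']
```

The full-suite fallback trades speed for safety: selection is only as good as the dependency map, so unknown changes should not silently skip tests.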

Test Closure and Defect Reporting: Generative AI simplifies reporting by producing visually appealing spreadsheets of test findings. It calculates results and presents them graphically, creates test summaries, and acts as a personal assistant, compiling comprehensive test documents that communicate findings effectively.
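The mechanical part of test closure, aggregating raw results into a spreadsheet-friendly summary, needs no AI; a generative model would typically sit on top of it to draft the narrative summary. The Python sketch below writes per-suite pass/fail counts as CSV using only the standard library; the result records are hypothetical.

```python
import csv
import io
from collections import Counter

# Hypothetical raw results: (suite, test name, status).
RESULTS = [
    ("login", "test_ok", "passed"),
    ("login", "test_bad_password", "failed"),
    ("cart", "test_add_item", "passed"),
    ("cart", "test_remove_item", "passed"),
    ("cart", "test_empty_cart", "skipped"),
]


def summarize(results: list[tuple[str, str, str]]) -> list[dict]:
    """Count outcomes per suite and compute a pass rate over executed tests."""
    counts = Counter((suite, status) for suite, _, status in results)
    rows = []
    for suite in sorted({suite for suite, _, _ in results}):
        passed, failed = counts[(suite, "passed")], counts[(suite, "failed")]
        executed = passed + failed
        rows.append({
            "suite": suite,
            "passed": passed,
            "failed": failed,
            "skipped": counts[(suite, "skipped")],
            "pass_rate": f"{passed / executed:.0%}" if executed else "n/a",
        })
    return rows


buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["suite", "passed", "failed", "skipped", "pass_rate"])
writer.writeheader()
writer.writerows(summarize(RESULTS))
print(buffer.getvalue())
```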

Test Coverage Assessment: LLMs can scrutinize code and identify sections lacking coverage from current test cases, ensuring a thorough and comprehensive testing strategy.

Tailoring Test Scenarios through Prompt Engineering: Prompt engineering techniques can steer LLMs toward test scenarios that are more specific and pertinent to the software’s domain, enhancing the relevance and effectiveness of the testing process.
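A simple form of this is a reusable prompt template that injects domain context and a worked example (few-shot prompting) ahead of the actual request. The Python sketch below only assembles the prompt text with `string.Template`; the banking domain, glossary, and example scenario are hypothetical, and the resulting string would be sent to whichever LLM client a team uses.

```python
from string import Template

PROMPT = Template(
    "You are a QA engineer testing $domain software.\n"
    "Domain terms:\n$glossary\n\n"
    "Example:\nFeature: $example_feature\nScenario: $example_scenario\n\n"
    "Now write three test scenarios for this feature, in the same format.\n"
    "Feature: $feature\n"
)


def build_domain_prompt(domain: str, glossary: dict[str, str],
                        example: tuple[str, str], feature: str) -> str:
    """Fill the template; `substitute` raises KeyError if a placeholder is missing."""
    glossary_text = "\n".join(f"- {term}: {meaning}" for term, meaning in glossary.items())
    return PROMPT.substitute(
        domain=domain,
        glossary=glossary_text,
        example_feature=example[0],
        example_scenario=example[1],
        feature=feature,
    )


prompt = build_domain_prompt(
    domain="retail banking",
    glossary={"KYC": "know-your-customer identity checks",
              "overdraft": "withdrawal beyond the available balance"},
    example=("Fund transfer",
             "Transfer fails with a clear message when it would exceed the overdraft limit"),
    feature="Opening a new savings account",
)
print(prompt)
```

Keeping the template separate from the domain data lets the same structure be reused across products while each team supplies its own glossary and examples.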

Continuous Integration/Continuous Deployment: Incorporating Large Language Models (LLMs) into Continuous Integration/Continuous Deployment (CI/CD) pipelines enables immediate insights into potential defects, test coverage, and other relevant metrics.
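At its simplest, acting on such insights in a pipeline means a step that reads metrics produced earlier in the build and fails the job when they miss agreed thresholds. The Python sketch below assumes a hypothetical `metrics.json` file written by earlier steps (for example, coverage from a coverage tool or risk findings from an LLM review step); the keys and thresholds are illustrative.

```python
import json
import sys

# Illustrative thresholds; a real team would agree on its own.
THRESHOLDS = {"line_coverage": 0.80, "max_high_risk_findings": 0}


def evaluate(metrics: dict) -> list[str]:
    """Return human-readable gate failures (an empty list means the gate passes)."""
    failures = []
    coverage = metrics.get("line_coverage", 0.0)
    if coverage < THRESHOLDS["line_coverage"]:
        failures.append(
            f"coverage {coverage:.0%} is below {THRESHOLDS['line_coverage']:.0%}"
        )
    findings = metrics.get("high_risk_findings", 0)
    if findings > THRESHOLDS["max_high_risk_findings"]:
        failures.append(f"{findings} high-risk finding(s) reported")
    return failures


def main(path: str) -> int:
    with open(path, encoding="utf-8") as fh:
        failures = evaluate(json.load(fh))
    for failure in failures:
        print(f"QUALITY GATE FAILED: {failure}")
    return 1 if failures else 0  # a non-zero exit status fails the CI job


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1]))
```

In a pipeline this would run as a single step, for example `python quality_gate.py metrics.json` (file names hypothetical), after the steps that produce the metrics.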

Leveraging Generative AI for Specialized Testing

Accessibility Testing and Compatibility Testing: Generative AI, powered by models such as Orca and Code Llama, extends its capabilities beyond traditional test case generation to specialized testing types such as accessibility testing and compatibility testing. These systems can simulate diverse user interactions, helping ensure that software meets quality standards and is accessible to users with varying needs. They also streamline compatibility testing across different environments, devices, and platforms, contributing to a more robust and versatile product.
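Many accessibility checks are rule-based and easy to automate, giving AI-driven testing a baseline to build on. The Python sketch below flags `<img>` elements that have no `alt` attribute, a check related to WCAG success criterion 1.1.1 (Non-text Content), using the standard-library `html.parser`. It is a single illustrative rule, not a full accessibility audit, and it deliberately allows an empty `alt=""`, which marks decorative images.

```python
from html.parser import HTMLParser


class MissingAltChecker(HTMLParser):
    """Collects <img> tags that have no alt attribute at all."""

    def __init__(self) -> None:
        super().__init__()
        self.problems: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        if "alt" not in attributes:
            line, _ = self.getpos()
            src = attributes.get("src", "<no src>")
            self.problems.append(f"line {line}: <img src={src!r}> has no alt text")


def check_alt_text(html: str) -> list[str]:
    checker = MissingAltChecker()
    checker.feed(html)
    checker.close()
    return checker.problems


page = """<main>
<img src="logo.png" alt="Company logo">
<img src="chart.png">
<img src="divider.png" alt="">
</main>"""
for problem in check_alt_text(page):
    print(problem)
```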

Test Metrics Optimization, Outcome Measurement, and Continuous Improvement

Beyond test execution, the real value of Generative AI emerges in optimizing how outcomes are measured. By employing machine learning algorithms, these systems can analyze large testing data sets to derive meaningful test metrics, including key performance indicators (KPIs), defect density, and overall testing efficiency. This automated analysis improves the accuracy of metrics and provides actionable insights for continuous improvement.

Generative AI facilitates a comprehensive approach to outcome measurement, ensuring that testing activities translate into tangible insights. Key test metrics, such as test coverage, defect detection rate, and time-to-resolution, are meticulously tracked and analyzed. This data-driven approach enables teams to make informed decisions, optimize testing strategies, and drive continuous improvement initiatives. As a result, the integration of Generative AI improves testing efficiency and contributes to the overall enhancement of software quality throughout the development lifecycle.
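The metrics named above have simple, widely used definitions, whatever tool supplies the inputs. The Python sketch below computes defect density (defects per thousand lines of code), a defect detection percentage (one common definition of detection rate: the share of all known defects caught before release), and mean time to resolution. The input numbers are hypothetical.

```python
from datetime import datetime, timedelta


def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    return defects / (lines_of_code / 1000)


def defect_detection_percentage(found_in_testing: int, found_after_release: int) -> float:
    """Share of all known defects that testing caught before release."""
    total = found_in_testing + found_after_release
    return 100.0 * found_in_testing / total if total else 0.0


def mean_time_to_resolution(tickets: list[tuple[datetime, datetime]]) -> timedelta:
    """Average of (resolved - opened) across resolved defect tickets."""
    if not tickets:
        raise ValueError("need at least one resolved ticket")
    durations = [resolved - opened for opened, resolved in tickets]
    return sum(durations, timedelta()) / len(durations)


# Hypothetical release data.
print(f"Defect density: {defect_density(18, 45_000):.2f} per KLOC")
print(f"Defect detection: {defect_detection_percentage(45, 5):.1f}%")
tickets = [
    (datetime(2024, 3, 1, 9), datetime(2024, 3, 1, 17)),  # resolved in 8 hours
    (datetime(2024, 3, 2, 9), datetime(2024, 3, 3, 9)),   # resolved in 24 hours
]
print("Mean time to resolution:", mean_time_to_resolution(tickets))
```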

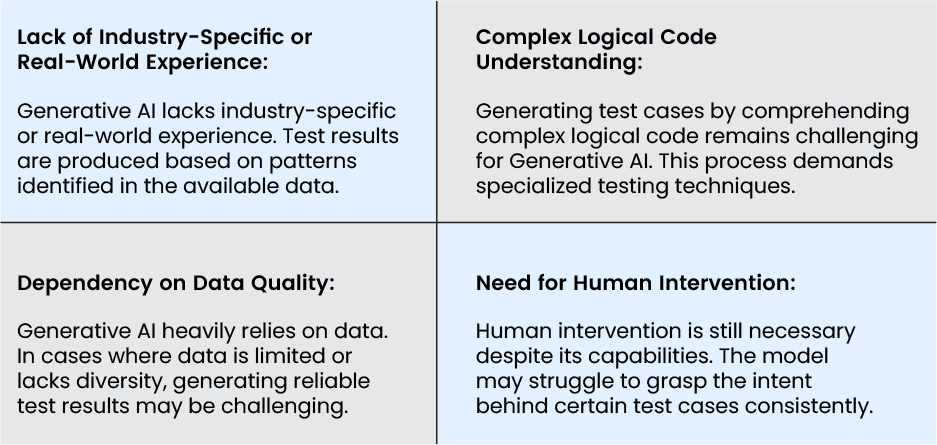

Limitations of Generative AI Testing

Generative AI is strong at understanding, analyzing, and executing the entire software testing life cycle, yet it has notable limitations:

Why Indium

Indium Software offers a range of specialized services that capitalize on the capabilities of Artificial Intelligence (AI), Machine Learning (ML), and Generative AI (Gen AI) throughout the testing life cycle. One of our prominent services is AI-powered Test Automation, which leverages advanced algorithms and machine learning models to create efficient and scalable automated testing frameworks. This service ensures faster test execution, reduced manual intervention, and increased test coverage, improving software quality. You can explore more about our AI-powered Test Automation service here.

To learn more about Indium’s Gen AI Testing capabilities, visit

Organizations engaged in software product and application development can leverage the integration of Generative AI and Large Language Models (LLMs) for software testing. With minimal human intervention, Generative AI enables the production of high-performance, high-quality applications. It can generate test cases in many programming languages, fostering collaboration among software testers and cross-functional teams. In the current technological era, Generative AI and LLMs are strategic enablers for quality assurance and digital assurance, and they deserve the credit for this advancement.